How to Invent a Method of Forensic Linguistic Analysis

As I've mentioned before, I am writing my master's thesis on a method of linguistic demographic profiling through analysis of native language transference... a specific method that I am in the process of inventing. Not from whole cloth, of course. The potential method I have developed is an amalgamation of established forensic linguistic techniques and contemporary research in machine-based language processing and computational linguistic analysis. I am calling this method "Native Language Analysis," as my advisor suggested. (I wanted to call it native language deduction for the Holmesian connotations, but she said no.) I am designing this proposed method of linguistic demographic profiling specifically for native language diagnosis and supporting it with quantified statistical data.

Without getting too deep into sociolinguistic philosophy, the concept central to my research is that - like spoken accents - native speakers of a particular language frequently produce similar, predictable nonstandard constructions when writing in a particular foreign language. That is to say that you could potentially deduce someone's first language (L1) based on the choices they make when writing in a secondary language (L2), especially if their linguistic choices correspond to those frequently made by confirmed speakers of their L1. These choices result in language elements that are referred to as cross-linguistic transference features, interlingual transfer features, interlingual errors, and similar permutations of these terms.

My ultimate goal for Native Language Analysis is to see it replace current standards of native language profiling. Determining an anonymous author's native language is typically a matter of relying on the experience of the investigating linguist with the questioned language, or the consultation of experts. In cases where at least one suspect is a non-native English speaker, language evidence might be examined for transference features indicative of that suspect's L1. These are just some common responses to non-native language evidence, of course, if native language identification is attempted at all. Frequently, investigators postulate no further than profiling an author as either a native speaker or not. Though this is typically considered sufficient, it is, in my opinion, far too subjective for forensic investigations where actual lives and freedoms may be at stake.

The solution to this subjectivity is data. Such data exists, scattered throughout the literature as it may be. I believe that such data can be harnessed to bring more scientifically-sound objectivity to forensic linguistic investigation. Hypothetically, NLA will accomplish this by providing a framework for the identification of transference features and the comparative analyses of those features and frequencies against a reference catalogue of cross-linguistic transference features.

For this reason, my thesis is only partly a study on the practicality of such a catalogue as well as a technique based on it. In order to test the feasibility of a feature-based, language-index reference catalogue, I have to compile this catalogue first. The prototype catalogue designed for my thesis is comprised of statistically quantified features, including data for languages in which I have no education and therefore cannot comprehend. For now, the prototype catalogue is primarily organized by language, with a sub-catalogue for each language.

For deadline reasons, the prototype catalogue is limited to four languages: Spanish, Arabic, Russian, and German. Of those, I am fluent in Spanish and know basically nothing about the linguistics of Arabic, Russian, or German. The inclusion of languages that I am unfamiliar with will be a test of NLA's potential to reduce the aforementioned reliance on an investigating linguist's intuition.

Keeping with my goals of objectivity, each language's sub-catalogue comprises twelve transfer features determined to be, quite mathematically, the most common transference features. The choice to limit each index to twelve features is inspired by the fact that several source studies concluded that testing for at most twelve features produced the most reliable results in automated feature-based analyses. Additionally, twelve features should capture a decent range of any language features that might appear in real evidence while still being a manageable number for an investigator to consider.

The "top twelve" features for each sub-catalogue are arranged according to my best efforts to collectively weight the transference features reported in the source literature that I reference. I reference so many studies. So many. The studies I review include several papers published by researchers from different academic disciplines, each of whom frequently have their own conventions for labeling and presenting their data. Which is why my biggest challenge has been to compile, corroborate, and organize statistical data from all of these studies. Sometimes it is little differences - such as one paper broadly categorizing some features as "determiner errors" where another paper further divides their feature categories into "indefinite articles," "relative pronouns," etc. I am doing my best to reconcile this discord in reported data. It's just a tediously difficult task. If I had funding, I would make undergraduate research assistants do it. I don't have funding though.

My prototype catalogue is not perfect (maybe if I had those undergrads...). It would be greatly improved if it were informed by corpus analyses specifically conducted to identify cross-linguistic transfer features and relevant statistical data. Perhaps after I publish my thesis, other researchers who have the training and resources will be inspired to do such analyses and share their own take on an NLA catalogue.

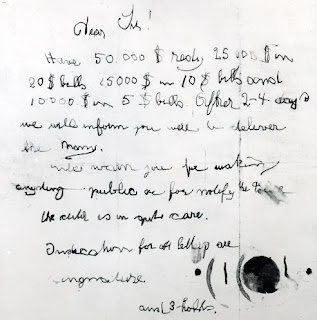

Despite the challenges and setbacks I've faced this year, my prototype catalogue - and my thesis - is mostly finished. In fact, I already completed the sub-catalogue of Arabic transfer features and have even tested it on language evidence from an infamous closed case. So, next week's blog post will be a (lightly edited) except from my thesis so that I can share the Arabic language portion of my prototype catalogue. I especially want to share my methods and results in using the catalogue of Arabic transference features to cross-analyzing real forensic linguistic evidence.

Spoiler alert: The results look promising!

Without getting too deep into sociolinguistic philosophy, the concept central to my research is that - like spoken accents - native speakers of a particular language frequently produce similar, predictable nonstandard constructions when writing in a particular foreign language. That is to say that you could potentially deduce someone's first language (L1) based on the choices they make when writing in a secondary language (L2), especially if their linguistic choices correspond to those frequently made by confirmed speakers of their L1. These choices result in language elements that are referred to as cross-linguistic transference features, interlingual transfer features, interlingual errors, and similar permutations of these terms.

My ultimate goal for Native Language Analysis is to see it replace current standards of native language profiling. Determining an anonymous author's native language is typically a matter of relying on the experience of the investigating linguist with the questioned language, or the consultation of experts. In cases where at least one suspect is a non-native English speaker, language evidence might be examined for transference features indicative of that suspect's L1. These are just some common responses to non-native language evidence, of course, if native language identification is attempted at all. Frequently, investigators postulate no further than profiling an author as either a native speaker or not. Though this is typically considered sufficient, it is, in my opinion, far too subjective for forensic investigations where actual lives and freedoms may be at stake.

The solution to this subjectivity is data. Such data exists, scattered throughout the literature as it may be. I believe that such data can be harnessed to bring more scientifically-sound objectivity to forensic linguistic investigation. Hypothetically, NLA will accomplish this by providing a framework for the identification of transference features and the comparative analyses of those features and frequencies against a reference catalogue of cross-linguistic transference features.

For this reason, my thesis is only partly a study on the practicality of such a catalogue as well as a technique based on it. In order to test the feasibility of a feature-based, language-index reference catalogue, I have to compile this catalogue first. The prototype catalogue designed for my thesis is comprised of statistically quantified features, including data for languages in which I have no education and therefore cannot comprehend. For now, the prototype catalogue is primarily organized by language, with a sub-catalogue for each language.

For deadline reasons, the prototype catalogue is limited to four languages: Spanish, Arabic, Russian, and German. Of those, I am fluent in Spanish and know basically nothing about the linguistics of Arabic, Russian, or German. The inclusion of languages that I am unfamiliar with will be a test of NLA's potential to reduce the aforementioned reliance on an investigating linguist's intuition.

Keeping with my goals of objectivity, each language's sub-catalogue comprises twelve transfer features determined to be, quite mathematically, the most common transference features. The choice to limit each index to twelve features is inspired by the fact that several source studies concluded that testing for at most twelve features produced the most reliable results in automated feature-based analyses. Additionally, twelve features should capture a decent range of any language features that might appear in real evidence while still being a manageable number for an investigator to consider.

The "top twelve" features for each sub-catalogue are arranged according to my best efforts to collectively weight the transference features reported in the source literature that I reference. I reference so many studies. So many. The studies I review include several papers published by researchers from different academic disciplines, each of whom frequently have their own conventions for labeling and presenting their data. Which is why my biggest challenge has been to compile, corroborate, and organize statistical data from all of these studies. Sometimes it is little differences - such as one paper broadly categorizing some features as "determiner errors" where another paper further divides their feature categories into "indefinite articles," "relative pronouns," etc. I am doing my best to reconcile this discord in reported data. It's just a tediously difficult task. If I had funding, I would make undergraduate research assistants do it. I don't have funding though.

My prototype catalogue is not perfect (maybe if I had those undergrads...). It would be greatly improved if it were informed by corpus analyses specifically conducted to identify cross-linguistic transfer features and relevant statistical data. Perhaps after I publish my thesis, other researchers who have the training and resources will be inspired to do such analyses and share their own take on an NLA catalogue.

Despite the challenges and setbacks I've faced this year, my prototype catalogue - and my thesis - is mostly finished. In fact, I already completed the sub-catalogue of Arabic transfer features and have even tested it on language evidence from an infamous closed case. So, next week's blog post will be a (lightly edited) except from my thesis so that I can share the Arabic language portion of my prototype catalogue. I especially want to share my methods and results in using the catalogue of Arabic transference features to cross-analyzing real forensic linguistic evidence.

Spoiler alert: The results look promising!

Comments

Post a Comment