Native Language Analysis for Arabic Transference Features in the Daniel Pearl Abduction Emails

In my thesis, I hypothesize that forensic linguistic techniques of linguistic demographic profiling can be honed into a method of native language analysis that is supported by quantified language transference data. My previous post explains how I set out to develop Native Language Analysis (NLA) as just such a method, and I strongly suggest you read it first. To summarize, I am essentially expanding on the established techniques of author profiling by incorporating quantified language data to connect interlanguage transference features to the native languages that likely inspired them.

As promised, this post is based on the sections of my thesis where I test my hypothetical method by using it to analyze the language evidence from real forensic linguistic cases. One of the languages I catalogued data for was Arabic. The evidence I analyzed comes from the case of Daniel Pearl's abduction.

Daniel Pearl was a

journalist for The Wall Street Journal

on assignment in Pakistan when he was lured into an abduction in Karachi on

January 23, 2002. In the days following, Pearl’s kidnappers sent two emails to

several newspaper editors, editorials, and publishers. Pearl was executed by

his abductors on February 2, a few days after the second email was delivered.

The parties responsible for kidnapping and murdering Pearl were eventually identified and arrested. They were Pakistani operatives of Al-Qaeda and native speakers of Arabic. The text of the two emails were extracted from published transcriptions and comprise an evidence corpus of approximately 303 words. This evidence corpus is examined using the prototype Arabic transference catalog and the NLA methods of my thesis.

A total of 19 error types were identified and tagged. The table below describes the 12 most frequent error types identified within the evidence corpus. Like the NLA feature catalog, the errors are organized according to frequency of occurrence.

As promised, this post is based on the sections of my thesis where I test my hypothetical method by using it to analyze the language evidence from real forensic linguistic cases. One of the languages I catalogued data for was Arabic. The evidence I analyzed comes from the case of Daniel Pearl's abduction.

|

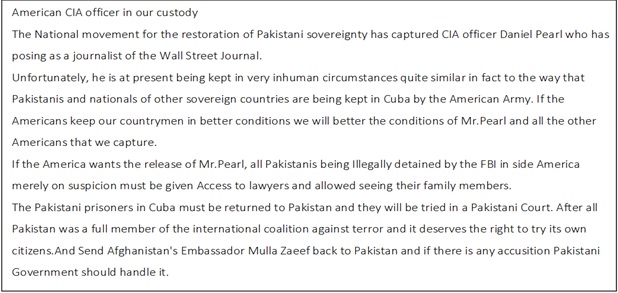

| Text of Email 1 |

The parties responsible for kidnapping and murdering Pearl were eventually identified and arrested. They were Pakistani operatives of Al-Qaeda and native speakers of Arabic. The text of the two emails were extracted from published transcriptions and comprise an evidence corpus of approximately 303 words. This evidence corpus is examined using the prototype Arabic transference catalog and the NLA methods of my thesis.

A total of 19 error types were identified and tagged. The table below describes the 12 most frequent error types identified within the evidence corpus. Like the NLA feature catalog, the errors are organized according to frequency of occurrence.

These results demonstrate that 75% of the transference

features observed in the evidence corpus were predicted by the prototype Arabic

feature catalog. Of the 12 most frequent error types, nine are consistent with those in the catalog.

Most errors are not in the order of frequency predicted by

the Arabic feature catalog, as described by the final column. For example,

Spelling-Choice errors are ranked 1 in both the catalog and evidence corpus,

while Vocab-Choice errors are ranked 6 in the evidence corpus, but rank 11 in

the feature catalog.

Three of the 12 most frequent transfer feature types

identified in this evidence corpus are not predicted by the prototype catalog: Punctuation-Order (2), Connector-Overuse (8), and Verb Phrase-Choice

(9). Interestingly, Connector-Overuse errors might be related to run-on

sentence formation, which is a transference that was not ranked among the most

frequent 12, but was noted by two other papers my research references (Sawalmeh, 2013 and Al-Buainain, 2007). Both Capitalization-Overuse (4) and Capitalization-Underuse (7) are counted as

concurring with Capitalization-Underuse in the Arabic catalog, as the overuse

of capitalization may be the result of hypercorrection on the part of the

writer. Additionally, dialectal variation is also a potential

factor not accounted for here. Finally, some of these discrepancies might be attributed to stylistic

choices of authorship or my interpretation of the literature;

this is another potentially limiting factor.

The writers of the emails were confirmed to be native speakers of Arabic. Considering the conclusive identification of the authors and the relative concordance between the transference errors coded in the corpus and those in the Arabic feature catalog, I believe this a tentatively successful test of the potential for referencing organized language feature data in order to predict author native language. Since this test was conducted like a NLA in reverse, the 75% prediction rate seems promising. With dedicated resources, an even better catalog would likely be more conclusive. As they say, more research is needed.

The writers of the emails were confirmed to be native speakers of Arabic. Considering the conclusive identification of the authors and the relative concordance between the transference errors coded in the corpus and those in the Arabic feature catalog, I believe this a tentatively successful test of the potential for referencing organized language feature data in order to predict author native language. Since this test was conducted like a NLA in reverse, the 75% prediction rate seems promising. With dedicated resources, an even better catalog would likely be more conclusive. As they say, more research is needed.

Comments

Post a Comment